Summary

The SAM-Guide project aims to help visually impaired people (VIP) interact with the world around them by creating smart devices that convert visuo-spatial information into sounds and vibrations they can hear and feel. Rather than trying to replace vision entirely, the project focuses on giving people the specific spatial information they need for everyday tasks such as finding objects, navigating spaces, and even taking part in sports. Teams of researchers from multiple French universities are developing wearable devices, such as vibrating belts and audio systems, that can guide users toward targets or help them understand their surroundings. They are testing these technologies through virtual reality games and real-world activities, including a new sport called laser-run designed for VIP. The project’s ultimate goal is to create a common “language” of sounds and vibrations that helps VIP gain more independence in a range of activities, from simple daily tasks to recreational sports.

1) I played a major role in launching this project: I brought the consortium members together and wrote most of the grant proposal (ANR AAPG 2021, 609k€ in funding).

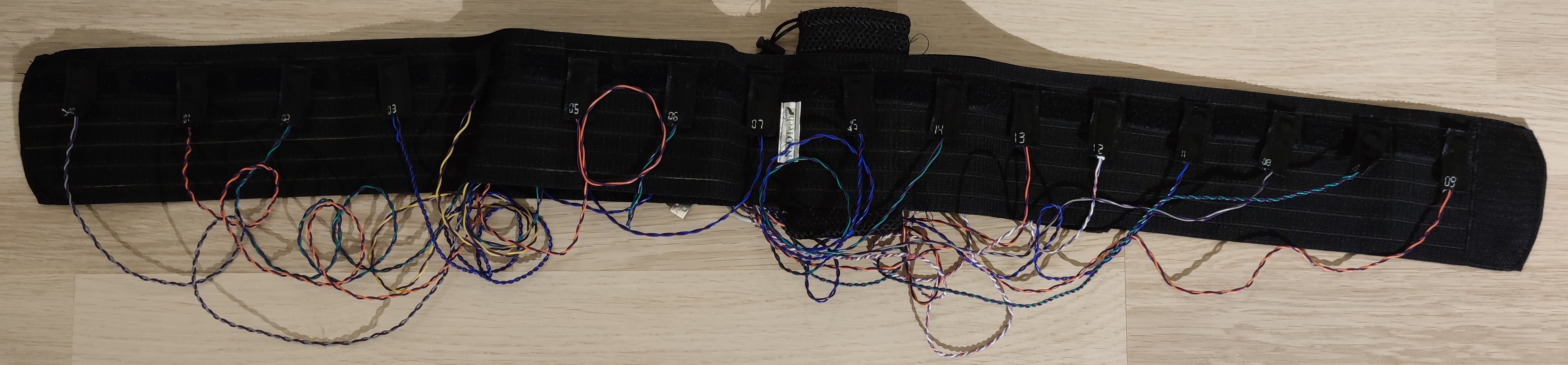

2) I designed and helped develop the second prototype of our vibro-tactile belt, which features wireless communication and detachable vibrators (C++/Arduino/ESP32):

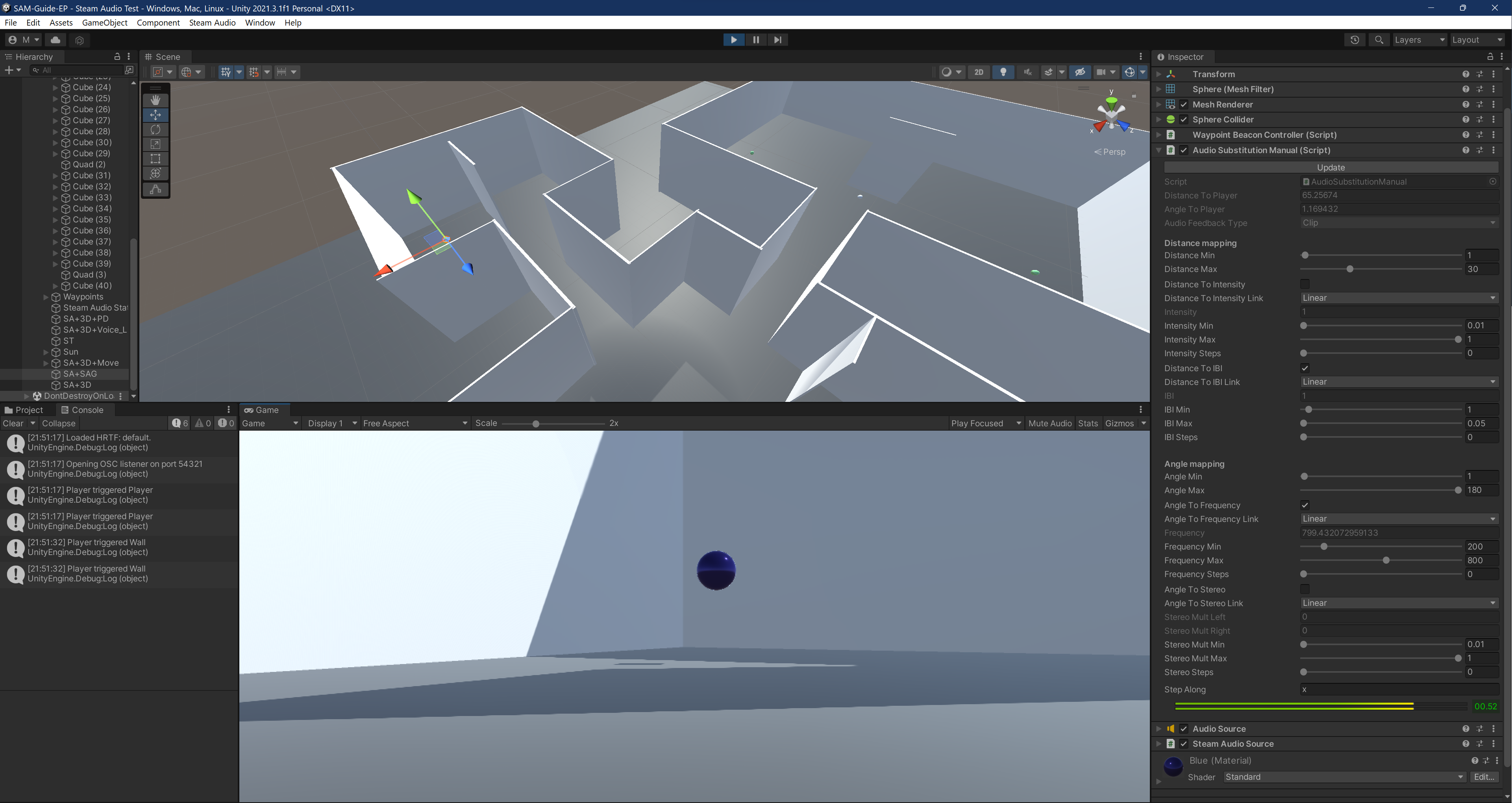

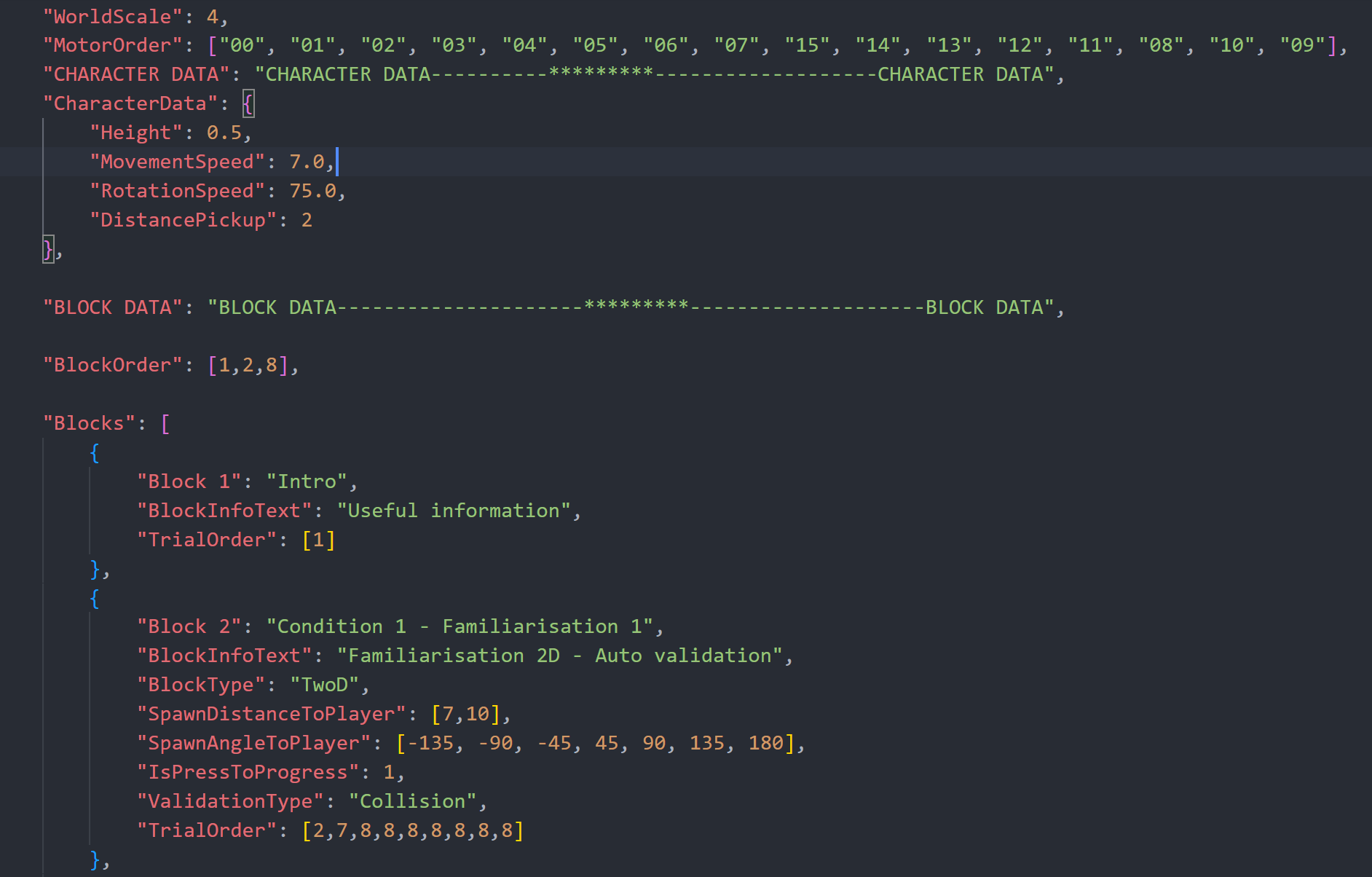

3) I led the design and development of the project’s experimental platform (C#/Unity). The platform connects to the motion tracking devices used by the consortium (Polhemus, VICON, Pozyx), uses PureData for sound-wave generation and Steam Audio for 3D audio modeling, and communicates wirelessly with the consortium’s non-visual interfaces.

This platform allows one to easily spin up experimental trials by specifying the desired characteristics in a JSON file (based on the OpenMaze project). Unity will automatically generate the trial’s environment according to those specifications and populate it with the relevant items (e.g. a tactile-signal emitting beacon signalling a target to reach in a maze), handle the transition between successive trials and blocks of trials, and log all the relevant user metrics into a data file.
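To illustrate the idea of driving trials from a specification file, here is a minimal, language-agnostic sketch in Python (the platform itself is written in C#/Unity). The JSON field names (`blocks`, `repeat`, `trials`, `maze_size`, `beacon`) and the helper functions are hypothetical and chosen for illustration only; they are not the platform’s or OpenMaze’s actual schema.

```python
import csv
import io
import json

# Hypothetical specification: every field name here is an illustrative
# assumption, not the real schema used by the platform or by OpenMaze.
SPEC_JSON = """
{
  "blocks": [
    {"name": "training", "repeat": 2, "trials": ["maze_easy"]},
    {"name": "test", "repeat": 1, "trials": ["maze_easy", "maze_hard"]}
  ],
  "trials": {
    "maze_easy": {"maze_size": [5, 5], "beacon": "tactile"},
    "maze_hard": {"maze_size": [9, 9], "beacon": "audio"}
  }
}
"""


def expand_trials(spec):
    """Flatten the blocks into the ordered list of trials to run."""
    schedule = []
    for block in spec["blocks"]:
        for rep in range(block["repeat"]):
            for trial_name in block["trials"]:
                schedule.append({
                    "block": block["name"],
                    "repetition": rep + 1,
                    "trial": trial_name,
                    # Copy the trial's own settings (maze size, beacon type).
                    **spec["trials"][trial_name],
                })
    return schedule


def write_log(schedule, stream):
    """Write one CSV row per trial; a real run would add measured metrics."""
    writer = csv.DictWriter(
        stream,
        fieldnames=["block", "repetition", "trial", "beacon"],
        extrasaction="ignore",  # skip fields not listed, e.g. maze_size
    )
    writer.writeheader()
    writer.writerows(schedule)


schedule = expand_trials(json.loads(SPEC_JSON))
log = io.StringIO()
write_log(schedule, log)
print(log.getvalue())
```

In this sketch the experimenter only edits the JSON; the order of trials and blocks and the layout of the log follow from it, which is the property the platform relies on.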

4) I handled the experimental design of the first wave of experiments using the TactiBelt for “blind” navigation.

5) I built the first version of the SAM-Guide website, built with Quarto and hosted on GitHub Pages (code).