1 Introduction

To Be Filled

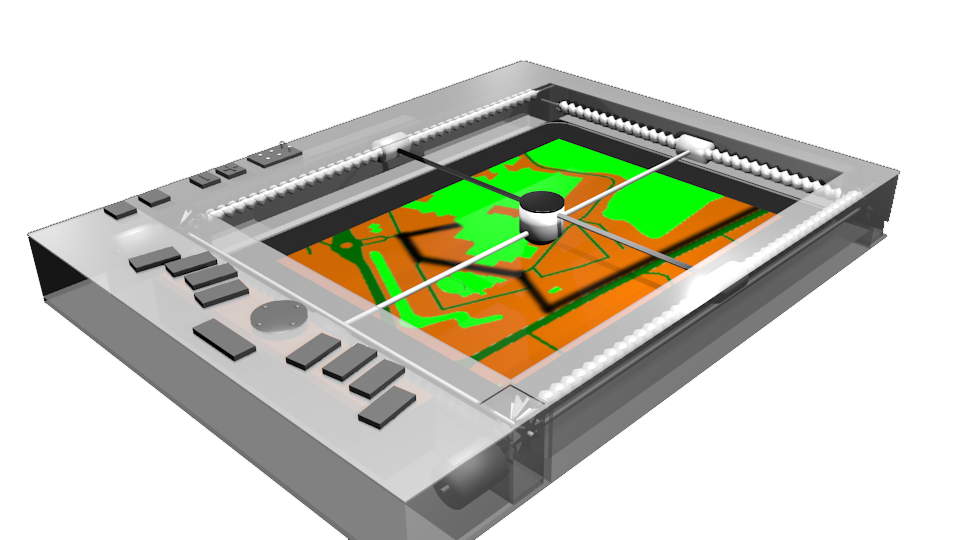

1.1 Our interface: F2T (v2)

During this project, we improved upon the first iteration of the Force Feedback Tablet (F2T) from the TETMOST project to design the finalized prototype of this interface:

2 Outcomes

1) We developed a Java application to create or convert images into simplified tactile representations, which can then be explored using the F2T:

2) We investigated and developed tools to automatically generate a navigation graph from a floor plan, which can then be converted into a tactile image and explored with the F2T:
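As a rough illustration of the second outcome, the sketch below derives a navigation graph from a floor plan that is assumed to be already rasterised into a grid of walkable and wall cells. The class name `FloorPlanGraph`, the `#` wall encoding, and the `row * cols + col` node ids are hypothetical choices made for this example; the project's actual tools and algorithm are not described here and may differ.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class FloorPlanGraph {

    /**
     * Builds an adjacency list over the walkable cells of a grid floor plan.
     * Assumption: '#' marks a wall, any other character is walkable,
     * and all rows have the same length.
     */
    public static Map<Integer, List<Integer>> build(String[] plan) {
        int rows = plan.length;
        int cols = plan[0].length();
        Map<Integer, List<Integer>> graph = new LinkedHashMap<>();
        // 4-connected neighbourhood: up, down, left, right.
        int[][] steps = {{-1, 0}, {1, 0}, {0, -1}, {0, 1}};

        for (int r = 0; r < rows; r++) {
            for (int c = 0; c < cols; c++) {
                if (plan[r].charAt(c) == '#') {
                    continue; // walls are not nodes
                }
                List<Integer> neighbours = new ArrayList<>();
                for (int[] s : steps) {
                    int nr = r + s[0];
                    int nc = c + s[1];
                    boolean inside = nr >= 0 && nr < rows && nc >= 0 && nc < cols;
                    if (inside && plan[nr].charAt(nc) != '#') {
                        neighbours.add(nr * cols + nc);
                    }
                }
                graph.put(r * cols + c, neighbours);
            }
        }
        return graph;
    }

    public static void main(String[] args) {
        // A tiny mock floor plan: two short corridors joined at one cell.
        String[] plan = {
            "#####",
            "#..##",
            "##..#",
            "#####"
        };
        build(plan).forEach((node, nbrs) -> System.out.println(node + " -> " + nbrs));
    }
}
```

Each walkable cell becomes a node and each pair of adjacent walkable cells becomes an edge. A real pipeline would likely reduce this dense graph to rooms and doorways before rendering it as a tactile image.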

3 My role in this project

1) Participated in the development of a Java app to control the F2T and display tactile “images”.

2) Helped design the first round of experimental evaluations, where participants were tasked with recognizing and re-drawing simple geometrical shapes, as well as the layout of a simple mock apartment.

3) Wrote a first-author conference article and a poster.