1 Introduction

Interacting with space is a constant challenge for Visually Impaired People (VIP), since spatial information in humans is typically provided by vision. Sensory Substitution Devices (SSDs) are promising Human-Machine Interfaces (HMI) for assisting VIP: they re-code missing visual information as stimuli for other sensory channels. Our project moves away from the initial ambition of SSDs, a single universal integrated device that would replace the whole sense organ, towards common encoding schemes for multiple applications.

SAM-Guide will search for the most natural way to give online access to geometric variables that are necessary to achieve a range of tasks without eyes. Defining such encoding schemes requires selecting a crucial set of geometrical variables, and building efficient and comfortable auditory and/or tactile signals to represent them. We propose to concentrate on action-perception loops representing target-reaching affordances, where spatial properties are defined as ego-centered deviations from selected beacons.
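To make the notion of an ego-centered deviation concrete, here is a minimal sketch of how a distance and a signed bearing to a beacon could be computed in 2D. The function name, the coordinate convention (heading measured counterclockwise from the +x axis, positive bearing meaning the beacon lies to the left), and the choice of these two variables are illustrative assumptions, not the project's actual encoding.

```python
import math


def egocentric_deviation(user_xy, heading_rad, beacon_xy):
    """Return (distance, bearing) of a beacon relative to the user.

    Hypothetical helper for illustration only.
    - heading_rad: user's facing direction, counterclockwise from the +x axis.
    - bearing: signed angle in [-pi, pi); positive means the beacon is to the left.
    """
    dx = beacon_xy[0] - user_xy[0]
    dy = beacon_xy[1] - user_xy[1]
    distance = math.hypot(dx, dy)
    # Direction of the beacon in world coordinates.
    world_angle = math.atan2(dy, dx)
    # Express it relative to the user's heading and wrap to [-pi, pi).
    bearing = (world_angle - heading_rad + math.pi) % (2 * math.pi) - math.pi
    return distance, bearing


# Example: user at the origin facing +x, beacon directly to the left.
distance, bearing = egocentric_deviation((0.0, 0.0), 0.0, (0.0, 2.0))
```

Such a pair of values could then drive an auditory or tactile signal, for instance by mapping the bearing to a position around the body and the distance to an intensity or pulse rate.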

The same grammar of cues could help VIP gain autonomy across a range of vital or leisure activities. Among such activities, the consortium has already made advances in orienting and navigating, object locating and reaching, and laser shooting. Based on current neurocognitive models of human action-perception and spatial cognition, the design of the encoding schemes will rest on common theoretical principles: parsimony (minimum yet sufficient information for a task), congruency (leverage existing sensorimotor control laws), and multimodality (redundant or complementary signals across modalities). To ensure efficient collaboration, all partners will develop and evaluate their transcoding schemes based on common principles, methodology, and tools. An inclusive user-centered “living-lab” approach will keep our solutions aligned with VIP’s needs.

Five labs (three campuses) comprising ergonomists, neuroscientists, engineers, and mathematicians, united by their interest and experience in designing assistive devices for VIP, will duplicate, combine, and share their pre-existing SSD prototypes: a vibrotactile navigation belt, an audio-spatialized virtual guide for jogging, and an object-reaching sonic pointer. Using those prototypes, they will iteratively evaluate and improve their transcoding schemes in a three-phase approach. First, the schemes will be tested in controlled experimental settings through augmented-reality serious games in motion capture (virtual prototyping facilitates the creation of ad-hoc environments, and gaming eases the participants’ engagement). Next, spatial interaction subtasks will be progressively combined and tested in wider and more ecological indoor and outdoor environments. Finally, SAM-Guide’s system will be fully transitioned to real-world conditions through a friendly sporting event of laser-run, a novel handi-sport, which will involve each subtask.

SAM-Guide will develop action-perception and spatial cognition theories relevant to non-visual interfaces. It will provide guidelines for the efficient representation of spatial interactions to facilitate the emergence of spatial awareness in a task-oriented perspective. Our portable modular transcoding libraries are independent of hardware considerations. The principled experimental platform offered by AR games will be a tool for evaluating VIP spatial cognition, and novel strategies for mobility training.

2 My role in this project

1) I played a major role in launching this project, by connecting the consortium members and writing most of the grant proposal (ANR AAPG 2021, funding of 609k€). The project will last 4 years and allow the recruitment of two PhD students, one post-doc, and one research engineer.

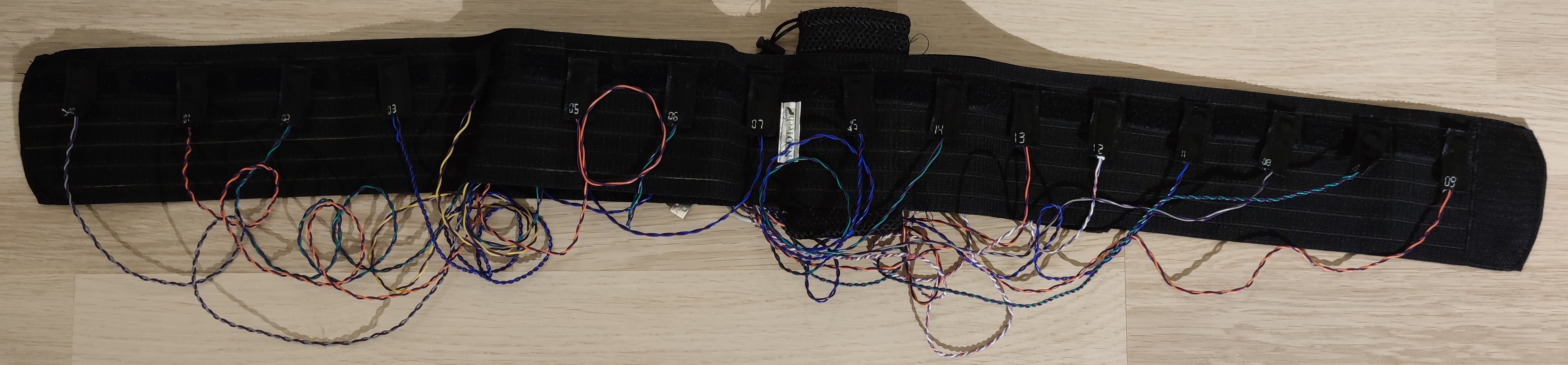

2) I designed and participated in the development of the second prototype of our vibro-tactile belt, which features wireless communication (thanks to an ESP32 module) and removable vibrators:

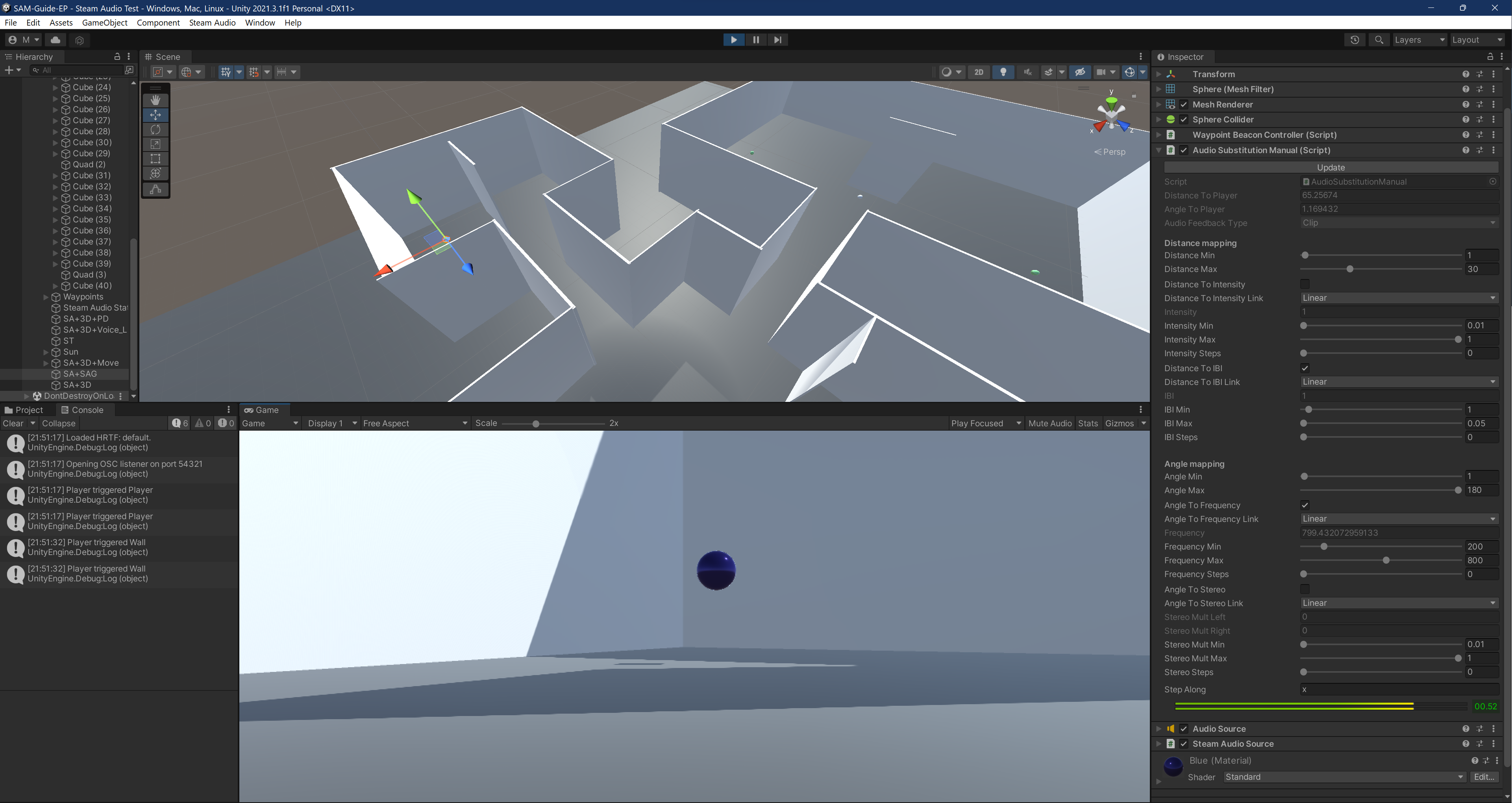

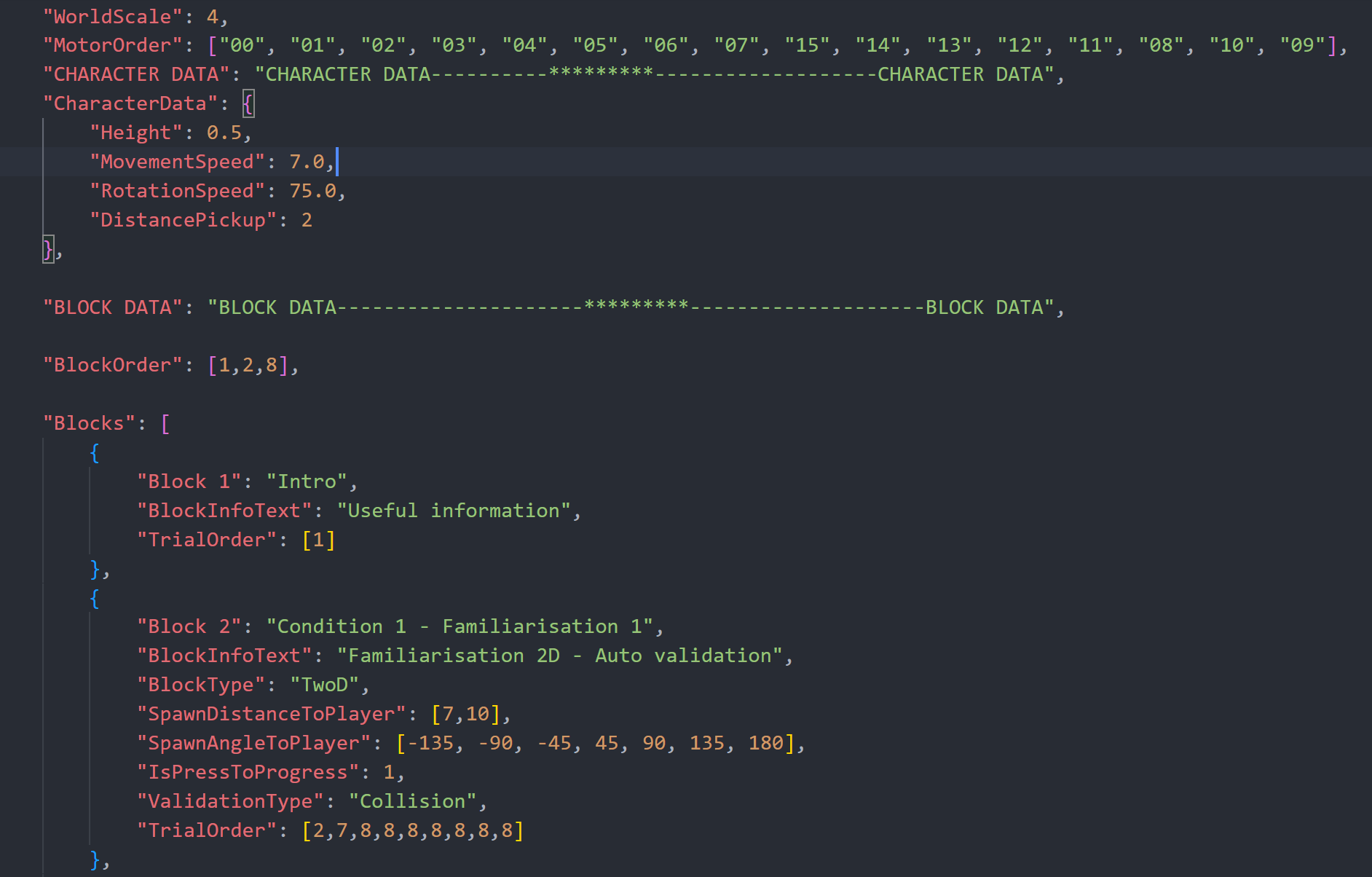

3) I lead the design and development of the project’s experimental platform. The platform uses Unity, connects to the various motion tracking devices used by the consortium (Polhemus, VICON, Pozyx), uses PureData for sound-wave generation and Steam Audio for 3D audio modeling, and communicates wirelessly with the consortium’s non-visual interfaces.

This platform allows one to easily spin up experimental trials by specifying the desired characteristics in a JSON file (based on the OpenMaze project). Unity will automatically generate the trial’s environment according to those specifications and populate it with the relevant items (e.g. a tactile-signal emitting beacon signalling a target to reach in a maze), handle the transition between successive trials and blocks of trials, and log all the relevant user metrics into a data file.
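As a rough illustration of this JSON-driven workflow, the sketch below parses a trial specification into simple Python objects. The schema (`blocks`, `trials`, `environment`, `beacons`, `modality`, `position`) and all names are hypothetical and chosen for illustration; they are not the platform's actual format nor the OpenMaze schema, and the real platform performs this step inside Unity.

```python
import json
from dataclasses import dataclass


@dataclass
class Beacon:
    modality: str    # e.g. "tactile" or "audio" (hypothetical values)
    position: tuple  # (x, y, z) in scene units


@dataclass
class Trial:
    name: str
    environment: str
    beacons: list


# Hypothetical specification: one block containing one maze trial.
EXAMPLE_SPEC = """
{
  "blocks": [
    {
      "name": "practice",
      "trials": [
        {
          "name": "maze_01",
          "environment": "maze",
          "beacons": [{"modality": "tactile", "position": [2.0, 0.0, 5.0]}]
        }
      ]
    }
  ]
}
"""


def load_trials(spec_text):
    """Parse a JSON specification into a flat list of (block_name, Trial)."""
    spec = json.loads(spec_text)
    trials = []
    for block in spec["blocks"]:
        for t in block["trials"]:
            beacons = [
                Beacon(b["modality"], tuple(b["position"]))
                for b in t["beacons"]
            ]
            trials.append(
                (block["name"], Trial(t["name"], t["environment"], beacons))
            )
    return trials


trials = load_trials(EXAMPLE_SPEC)
```

Keeping the trial description in a declarative file like this means that a new experiment can be set up by editing data rather than code.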

4) I handled the experimental design of the first wave of experiments using the TactiBelt for “blind” navigation.

5) I designed the project’s website using Quarto.