1 Introduction

2 My role in this project

1) Explore new solutions to improve the localization and tracking capabilities of CamIO:

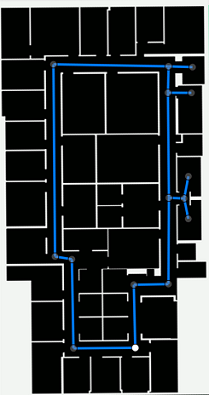

The existing solution, iLocalize (Fusco & Coughlan, 2018), an iOS app written in Swift, combined visual-inertial odometry (VIO) from Apple’s ARKit, particle filtering based on a simplified map of the environment, and drift correction through visual identification of known landmarks (using a gradient boosting algorithm).
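To illustrate how a simplified map can constrain position estimates, here is a minimal sketch of a 2D particle filter in Python. It is not iLocalize's implementation. The corridor map, noise levels, and the treatment of a recognized landmark as a noisy distance measurement are all hypothetical assumptions. Each odometry step shifts the particles, particles that leave the walkable area are discarded, and a landmark observation reweights the survivors.

```python
import math
import random
from dataclasses import dataclass

# Hypothetical simplified floor plan: walkable corridors as axis-aligned
# rectangles (x0, y0, x1, y1) in metres.
WALKABLE = [
    (0.0, 0.0, 20.0, 2.0),    # east-west corridor
    (18.0, 0.0, 20.0, 15.0),  # north-south corridor
]

def is_walkable(x: float, y: float) -> bool:
    return any(x0 <= x <= x1 and y0 <= y <= y1 for x0, y0, x1, y1 in WALKABLE)

@dataclass
class Particle:
    x: float
    y: float
    w: float

def init_particles(n: int, rng: random.Random) -> list[Particle]:
    """Spread n particles uniformly over the walkable area (rejection sampling)."""
    particles = []
    while len(particles) < n:
        x, y = rng.uniform(0.0, 20.0), rng.uniform(0.0, 15.0)
        if is_walkable(x, y):
            particles.append(Particle(x, y, 1.0 / n))
    return particles

def predict(particles, dx, dy, sigma, rng):
    """Motion update: apply the odometry displacement (e.g. from VIO) plus noise.
    Particles ending outside the map get zero weight. Only the end point is
    checked, so crossing a thin wall goes undetected (a simplification)."""
    for p in particles:
        p.x += dx + rng.gauss(0.0, sigma)
        p.y += dy + rng.gauss(0.0, sigma)
        if not is_walkable(p.x, p.y):
            p.w = 0.0

def landmark_update(particles, landmark, measured_dist, sigma):
    """Measurement update: Gaussian likelihood of the observed distance
    to a recognized landmark at a known map position (assumed model)."""
    lx, ly = landmark
    for p in particles:
        d = math.hypot(p.x - lx, p.y - ly)
        p.w *= math.exp(-0.5 * ((d - measured_dist) / sigma) ** 2)

def normalize(particles):
    total = sum(p.w for p in particles)
    if total == 0.0:
        raise RuntimeError("all particles eliminated; re-initialize the filter")
    for p in particles:
        p.w /= total

def systematic_resample(particles, rng):
    """Draw an equally weighted set; heavy particles are duplicated,
    zero-weight particles are dropped."""
    n = len(particles)
    step = 1.0 / n
    u = rng.uniform(0.0, step)
    out, i, cumulative = [], 0, particles[0].w
    for k in range(n):
        target = u + k * step
        while cumulative < target and i < n - 1:
            i += 1
            cumulative += particles[i].w
        out.append(Particle(particles[i].x, particles[i].y, step))
    return out

def estimate(particles):
    """Weighted mean position (weights must be normalized)."""
    return (sum(p.x * p.w for p in particles), sum(p.y * p.w for p in particles))

if __name__ == "__main__":
    rng = random.Random(0)
    particles = init_particles(500, rng)
    # The user walks 8 m east along the corridor, 1 m per odometry update.
    for _ in range(8):
        predict(particles, 1.0, 0.0, 0.1, rng)
        normalize(particles)
        particles = systematic_resample(particles, rng)
    # A landmark at (6, 1) is recognized about 4 m away.
    landmark_update(particles, (6.0, 1.0), 4.0, 0.3)
    normalize(particles)
    print(estimate(particles))
```

In the demo run, the distance measurement alone is ambiguous (the user could be 4 m on either side of the landmark), but the motion history combined with the map has already ruled out the western option, so the estimate should settle near (10, 1).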

I developed a web app (JavaScript / socket.io) that streams live video from a mobile phone's camera to a backend server (Python / Flask). Its purpose was to make it easy to experiment with new computer vision algorithms: the server processes the captured video and IMU data and sends location or navigation information back to the phone.
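As a minimal sketch of the server side of such a pipeline, the Python functions below decode one message from the phone and build a reply. The message format is an assumption for illustration, not the app's actual protocol: a JSON string with a timestamp `t`, a JPEG frame encoded as a data URL (as a browser's `canvas.toDataURL("image/jpeg")` produces), and the latest accelerometer and gyroscope readings. `parse_frame_message`, `make_reply`, and the reply fields are hypothetical names.

```python
import base64
import json
from dataclasses import dataclass

# Assumed client encoding: the phone draws a video frame to a <canvas> and
# sends canvas.toDataURL("image/jpeg") plus the latest IMU sample as JSON.
JPEG_DATA_URL_PREFIX = "data:image/jpeg;base64,"

@dataclass
class FramePacket:
    timestamp_ms: float
    jpeg: bytes
    accel: tuple  # accelerometer sample, as reported by the browser
    gyro: tuple   # rotation rates, as reported by the browser

def parse_frame_message(raw: str) -> FramePacket:
    """Decode one JSON frame message (hypothetical format)."""
    msg = json.loads(raw)
    image = msg["image"]
    if not image.startswith(JPEG_DATA_URL_PREFIX):
        raise ValueError("expected a base64-encoded JPEG data URL")
    jpeg = base64.b64decode(image[len(JPEG_DATA_URL_PREFIX):])
    if not jpeg.startswith(b"\xff\xd8"):  # JPEG start-of-image marker
        raise ValueError("decoded payload is not a JPEG")
    return FramePacket(
        timestamp_ms=float(msg["t"]),
        jpeg=jpeg,
        accel=tuple(float(v) for v in msg["accel"]),
        gyro=tuple(float(v) for v in msg["gyro"]),
    )

def make_reply(packet: FramePacket, x: float, y: float) -> dict:
    """Position estimate sent back to the phone; field names are hypothetical.
    Echoing the frame timestamp lets the client measure round-trip latency."""
    return {"t": packet.timestamp_ms, "x": x, "y": y}
```

With Flask-SocketIO, a handler registered via `@socketio.on(...)` could call `parse_frame_message`, pass the decoded frame and IMU sample to the algorithm under test, and send the result of `make_reply` back with `emit(...)`. Keeping the decoding separate from the socket handler makes it possible to test new algorithms on recorded messages without a phone attached.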

I also explored existing third-party services for indoor localization, such as IndoorAtlas, which combines VIO, GPS, Wi-Fi and geomagnetic fingerprinting, dead reckoning, and barometric readings (to detect altitude changes); I built a small demo with it.
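Of these signals, the barometric one is the simplest to illustrate. The sketch below is not IndoorAtlas's method: it converts pressure readings to altitude with the international barometric formula for the standard atmosphere, $h = 44330\,\big(1 - (p/p_0)^{1/5.255}\big)$ metres with $p_0 = 1013.25$ hPa, and turns the altitude difference into a floor change. The floor height of 3.5 m is a hypothetical, building-specific parameter.

```python
SEA_LEVEL_HPA = 1013.25  # standard-atmosphere reference pressure

def pressure_to_altitude(p_hpa: float, p0_hpa: float = SEA_LEVEL_HPA) -> float:
    """Altitude in metres from pressure in hPa (international barometric formula)."""
    return 44330.0 * (1.0 - (p_hpa / p0_hpa) ** (1.0 / 5.255))

def altitude_change(p_start_hpa: float, p_end_hpa: float) -> float:
    """Relative altitude change in metres; positive means the user went up."""
    return pressure_to_altitude(p_end_hpa) - pressure_to_altitude(p_start_hpa)

def floor_change(p_start_hpa: float, p_end_hpa: float,
                 floor_height_m: float = 3.5) -> int:
    """Number of floors climbed (negative when descending).
    floor_height_m is a hypothetical building-specific parameter."""
    return round(altitude_change(p_start_hpa, p_end_hpa) / floor_height_m)

if __name__ == "__main__":
    # Near sea level, a drop of about 0.42 hPa corresponds to roughly 3.5 m up.
    print(floor_change(1013.25, 1012.83))
```

Because weather also shifts air pressure, only differences between readings taken a short time apart are meaningful, and in practice noisy sensor readings would be smoothed before being compared.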

2) Assist in analyzing the data and writing a scientific paper presenting the project.