1 Introduction

AdViS aims to explore various ways to provide visuo-spatial information through auditory feedback, based on the Sensory Substitution framework. Since its inception, the project has investigated visuo-auditory substitution for several tasks in which vision plays a crucial role:

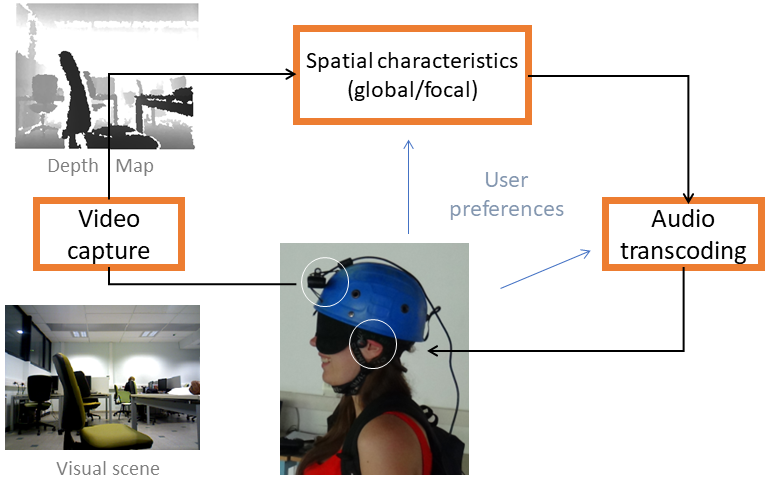

- Navigating a small maze using a depth-map-to-auditory-cues transcoding

- Finger-guided image exploration (on a touch screen)

- Eye-movement-guided image exploration (on a turned-off computer screen)

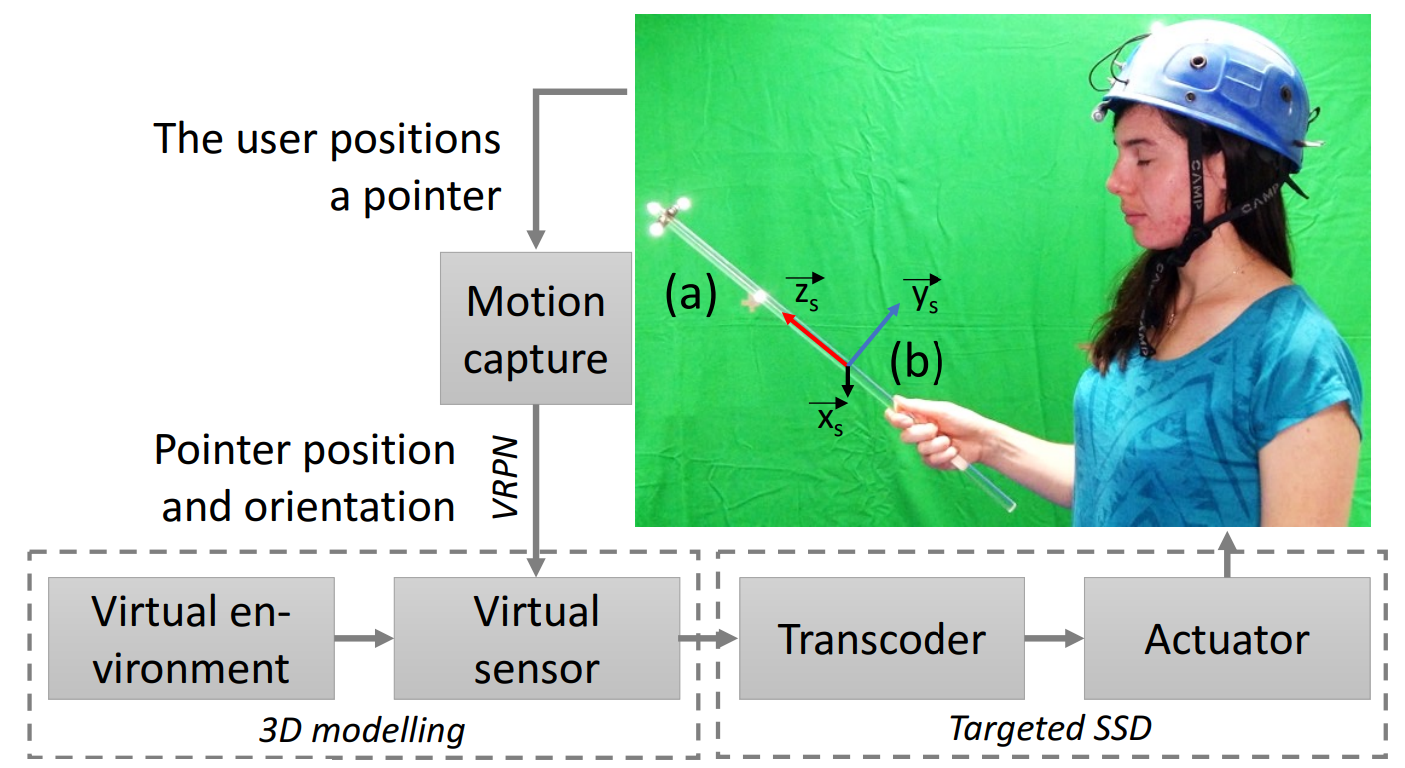

- Pointing towards and reaching a virtual target in 3D space using a motion capture environment

The AdViS system is coded in C++, uses PureData for complex sound generation, and relies on the VICON system to track participants’ movements in an augmented reality environment.

2 My role in this project

1) Proposed a new model for image exploration relying on a touch-mediated visuo-auditory feedback loop, in which a visually impaired person (VIP) explores an image by moving a finger across a screen and receives audio feedback based on the contents of the explored region.

2) Modified the existing AdViS system so that it can transcode greyscale images into soundscapes, driven by finger-motion information captured on a touchscreen.

3) Organized experimental evaluations with blindfolded students, tasked with recognizing geometrical shapes on a touchscreen, and analyzed the results.

4) Participated in implementing an ocular-motion-to-audio feedback loop in order to evaluate the possibility of exploring images (on a turned-off screen) with eye movements, which most VIPs whose impairment is not congenital can still control.
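To make the touch-mediated feedback loop from items 1) and 2) more concrete, here is a minimal C++ sketch of one possible transcoding step. It is not the actual AdViS code: the `GreyImage` and `SoundParams` types, the function names, the square exploration window, and the mapping itself (mean grey level to a log-spaced pitch between 200 Hz and 2000 Hz, horizontal finger position to stereo pan) are all assumptions chosen for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical greyscale image: row-major, 8 bits per pixel.
struct GreyImage {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;  // size must equal width * height
};

// Hypothetical sound parameters handed to the synthesis stage.
struct SoundParams {
    double frequencyHz;  // pitch of the feedback tone
    double pan;          // stereo position: -1 (left) to +1 (right)
};

// Mean grey level (0..255) in a square window of half-size `radius`
// centred on the finger position (fx, fy), clipped to the image borders.
double meanGreyAround(const GreyImage& img, int fx, int fy, int radius) {
    assert(img.width > 0 && img.height > 0);
    assert(img.pixels.size() ==
           static_cast<std::size_t>(img.width) * static_cast<std::size_t>(img.height));
    const int x0 = std::max(0, fx - radius);
    const int x1 = std::min(img.width - 1, fx + radius);
    const int y0 = std::max(0, fy - radius);
    const int y1 = std::min(img.height - 1, fy + radius);
    if (x0 > x1 || y0 > y1) return 0.0;  // window lies entirely outside the image

    double sum = 0.0;
    int count = 0;
    for (int y = y0; y <= y1; ++y) {
        for (int x = x0; x <= x1; ++x) {
            sum += img.pixels[static_cast<std::size_t>(y) * img.width + x];
            ++count;
        }
    }
    return sum / count;
}

// Assumed mapping: brighter regions give a higher pitch (log scale),
// and the finger's horizontal position gives the stereo pan.
SoundParams transcode(const GreyImage& img, int fx, int fy, int radius) {
    const double level = meanGreyAround(img, fx, fy, radius) / 255.0;  // 0..1
    const double fMin = 200.0;   // assumed lowest pitch, in Hz
    const double fMax = 2000.0;  // assumed highest pitch, in Hz

    SoundParams p;
    p.frequencyHz = fMin * std::pow(fMax / fMin, level);
    const int cx = std::min(std::max(fx, 0), img.width - 1);
    p.pan = (img.width > 1) ? 2.0 * cx / (img.width - 1) - 1.0 : 0.0;
    return p;
}
```

In a setup like the one described here, `transcode` would be called on each new touch event and its result forwarded to the sound generation stage (PureData in AdViS). How that hand-off is done is not specified in this document, so it is left out of the sketch.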